はじめに

参考

- https://cloud.google.com/nodejs/docs/reference/speech/latest

- https://cloud.google.com/docs/authentication/application-default-credentials?hl=ja

- https://cloud.google.com/speech-to-text/docs/troubleshooting

- https://cloud.google.com/speech-to-text/docs/speech-to-text-requests?hl=ja

- https://cloud.google.com/speech-to-text/docs/encoding

生成物

- Remixのフロントで、ブラウザのWeb APIの

MediaDevicesを使用して音声を録音 - RemixのバックエンドのAPIにファイルを送信し、Google STT APIでtranscrtiption(書き起こし)を作成

- RemixのフロントにAPIの結果としてtranscrtiptionを返し、ブラウザに表示

準備

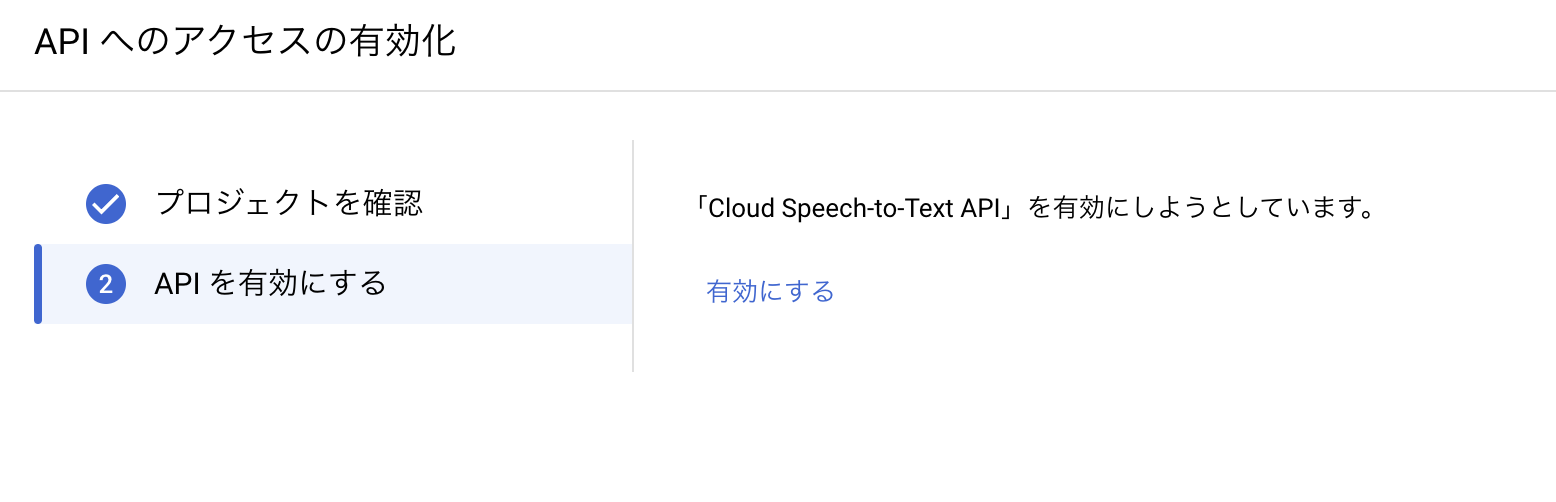

Cloud Speech-to-Text APIを有効にする

アプリケーションのデフォルト認証情報(ADC)の設定

方法はいくつか存在するが以下で行った

- GCPのIAMにCloud Speechへの権限を付与

- 環境変数 GOOGLE_APPLICATION_CREDENTIALSで、GCPにアクセスするIAM情報を含んだjsonファイルの場所を指定する

- .zshrcで、GOOGLE_APPLICATION_CREDENTIALSを読み込み

生成物の説明

フロントページ

import React, { useState, useRef, useEffect } from 'react';

import styles from "~/styles/index.module.css";

export function links() {

return [];

}

const AudioRecorder: React.FC = () => {

const [isRecording, setIsRecording] = useState(false);

const [mediaRecorder, setMediaRecorder] = useState<MediaRecorder | null>(null);

const [transcription, setTranscription] = useState("");

const audioChunks = useRef<Blob[]>([]);

const startRecording = async () => {

console.log("startRecording")

const stream = await navigator.mediaDevices.getUserMedia({ audio: true });

const recorder = new MediaRecorder(stream);

setMediaRecorder(recorder);

recorder.ondataavailable = (event: BlobEvent) => {

audioChunks.current.push(event.data);

};

recorder.start();

setIsRecording(true);

};

const stopRecording = async () => {

console.log("stopRecording")

if (!mediaRecorder) return;

mediaRecorder.stop();

setIsRecording(false);

mediaRecorder.onstop = async () => {

const audioBlob = new Blob(audioChunks.current, { type: 'audio/webm' });

const formData = new FormData();

formData.append('file', audioBlob);

const res = await fetch('/api/upload-audio', {

method: 'POST',

body: formData,

});

const json = await res.json();

if(json && 'transcription' in json){

setTranscription(json.transcription);

}

audioChunks.current = []; // Reset audio chunks

};

};

useEffect(() => {

console.log("Transcription updated:", transcription);

}, [transcription]);

return (

<div className={styles.container}>

<button

className={isRecording ? styles.stopButton : styles.startButton}

onClick={isRecording ? stopRecording : startRecording}

>

{isRecording ? 'Stop Recording' : 'Start Recording'}

</button>

<textarea className={styles.transcriptionTextarea} value={transcription} readOnly />

</div>

);

};

export default AudioRecorder;バックエンドAPI

import { json, ActionFunction } from '@remix-run/node';

import { SpeechClient } from '@google-cloud/speech';

import fs from 'fs';

export const action: ActionFunction = async ({ request }) => {

const formData = await request.formData();

const file = formData.get('file') as Blob;

if (!file) {

return json({ error: 'No file uploaded' }, { status: 400 });

}

const arrayBuffer = await file.arrayBuffer();

const buffer = Buffer.from(arrayBuffer);

fs.writeFile('./output/video.webm', buffer, () => console.log('video saved!') );

const audioBytes = Buffer.from(arrayBuffer).toString('base64');

const client = new SpeechClient();

const [response] = await client.recognize({

config: {

encoding: 'WEBM_OPUS',

sampleRateHertz: 48000,

languageCode: 'en-US',

},

audio: {

content: audioBytes,

},

});

const transcription = response.results

?.map(result => result.alternatives?.[0]?.transcript)

.filter(transcript => transcript !== undefined)

.join('\n') || 'No transcription available';

return json({ transcription });

};

コマンド

% npx remix vite:build

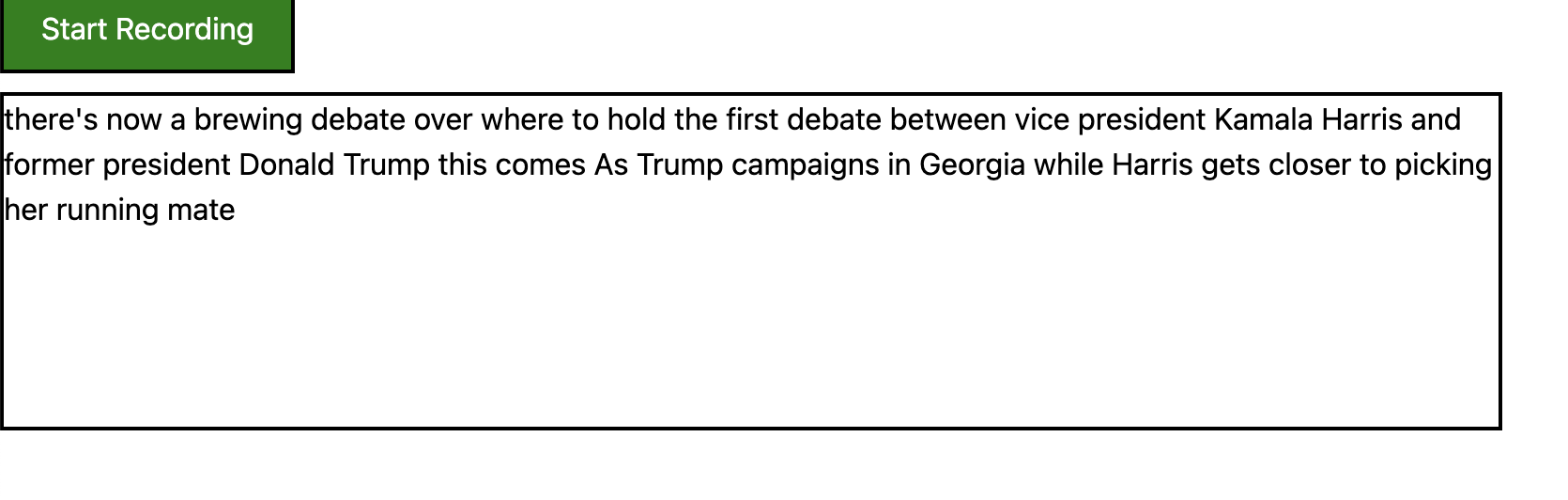

% npx remix-serve build/server/index.js結果

以下の冒頭10秒を処理

今後の検討

- 1分を超えるとエラーになる

- details: “Sync input too long. For audio longer than 1 min use LongRunningRecognize with a ‘uri’ parameter.”,